Publications

Below is a list of my publications.

2008

W. Broll, I. Lindt, I. Herbst, J. Ohlenburg, A. Braun, and R. Wetzel.

Towards Next-Gen Mobile AR Games.

IEEE Computer Graphics and Applications, 28(4):40-48, 2008. PDF

J. Ohlenburg, W. Broll, and I. Lindt.

Orchestration and Direction of Mobile MR Games.

CHI 2008 Workshop on Urban Mixed Realities: Technologies, Theories and Frontiers, 2008. PDF

Abstract: Mobile Mixed Reality (MR) games are embedded in the user's physical environment and therefore pose new requirements on the design, authoring, orchestration, and direction of the game. New supporting tools are required to meet the challenges of such games and to create an exciting and coherent experience for the users.

In this paper we focus on the orchestration and direction of mobile MR games and on how tools can support the developers and the people setting up such games. We also highlight that supporting tools might themselves become an important game element.

2007

I. Lindt, J. Ohlenburg, U. Pankoke-Babatz, and S. Ghellal.

A Report on the Crossmedia Game Epidemic Menace.

ACM Computers in Entertainment (CIE), 5(1), January 2007. PDF

Abstract: Crossmedia games employ a wide variety of gaming interfaces based on stationary and mobile devices to facilitate different game experiences within a single game. This article presents the crossmedia game Epidemic Menace, introduces the game concept, and describes experiences from two Epidemic Menace game events. We also explain the technical realization of Epidemic Menace, the evaluation methodologies we used, and some evaluation results.

J. Ohlenburg, W. Broll, and I. Lindt.

A Device Abstraction Layer for VR/AR.

In International Conference on Human-Computer Interaction (HCI 2007), 2007. PDF

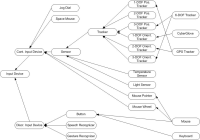

Abstract: While software developers for desktop applications can rely on mouse and keyboard as standard input devices, developers of virtual reality (VR) and augmented reality (AR) applications usually have to deal with a large variety of individual interaction devices. Existing device abstraction layers provide a solution to this problem, but are usually limited to a specific set or type of input devices. In this paper we introduce DEVAL, an approach to a device abstraction layer for VR and AR applications. DEVAL is based on a device hierarchy that is not limited to input devices but naturally extends to output devices.
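To illustrate the general idea of a device hierarchy that covers both input and output devices, here is a minimal Python sketch. It is not DEVAL's actual design or API: every class and function name below (`Device`, `InputDevice`, `OutputDevice`, `PositionTracker`, `TextDisplay`, `find_devices`) is hypothetical and chosen only for illustration. The point it shows is that an application asks for devices by abstract type rather than by concrete hardware.

```python
from abc import ABC, abstractmethod


class Device(ABC):
    """Root of a hypothetical hierarchy: every device has a name."""

    def __init__(self, name: str) -> None:
        self.name = name


class InputDevice(Device):
    """Devices the application reads from."""

    @abstractmethod
    def read(self) -> dict:
        ...


class OutputDevice(Device):
    """Devices the application writes to; they share the same root as inputs."""

    @abstractmethod
    def write(self, data: dict) -> None:
        ...


class PositionTracker(InputDevice):
    """Any tracker reporting a 3D position, independent of vendor or protocol."""

    def __init__(self, name: str, position=(0.0, 0.0, 0.0)) -> None:
        super().__init__(name)
        self._position = position

    def read(self) -> dict:
        return {"position": self._position}


class TextDisplay(OutputDevice):
    """A minimal output device that just collects the text it is asked to show."""

    def __init__(self, name: str) -> None:
        super().__init__(name)
        self.shown = []

    def write(self, data: dict) -> None:
        self.shown.append(data.get("text", ""))


def find_devices(devices, kind):
    """Select devices by abstract type rather than by concrete hardware class."""
    return [d for d in devices if isinstance(d, kind)]


# Usage: the application only asks for "an input device" or "an output device".
devices = [PositionTracker("optical-tracker", (1.0, 2.0, 0.5)), TextDisplay("hmd")]
for tracker in find_devices(devices, InputDevice):
    pos = tracker.read()["position"]
    for display in find_devices(devices, OutputDevice):
        display.write({"text": f"{tracker.name}: {pos}"})
```

Because output devices sit in the same tree as input devices, a query such as `find_devices(devices, Device)` enumerates all hardware uniformly.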

M. Wittkämper, I. Lindt, W. Broll, J. Ohlenburg, J. Herling, and S. Ghellal.

Exploring Augmented Live Video Streams for Remote Participation.

In Conference on Human Factors in Computing Systems (CHI 2007), 2007. PDF

Abstract: Augmented video streams display information within the context of the physical environment. In contrast to Augmented Reality, they do not require special equipment, they can support many users, and they are location-independent. In this paper we explore the potential of augmented video streams for remote participation. We present our design considerations for remote participation user interfaces, briefly describe their development, and explain the design of three application scenarios: watching a pervasive game, observing the quality of a production process, and exploring interactive science exhibits. The paper also discusses how to develop high-quality augmented video streams and which information and control options are required to obtain a viable remote participation interface.

2006

J. Ohlenburg and W. Broll.

Parallel multi-view rendering on multi-core processor systems.

In SIGGRAPH '06: ACM SIGGRAPH 2006 Research posters, 2006. PDF Poster

I. Lindt, J. Ohlenburg, U. Pankoke-Babatz, W. Prinz, and S. Ghellal.

Combining Multiple Gaming Interfaces in Epidemic Menace.

In CHI '06: CHI '06 extended abstracts on Human factors in computing systems, pages 213-218, 2006. PDF

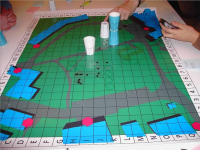

Abstract: This paper presents the multiple gaming interfaces of the crossmedia game Epidemic Menace, including a game board station, a mobile assistant, and a mobile Augmented Reality (AR) system. Each gaming interface offers different functionality within the game play. We explain the interfaces and describe early results of an ethnographic observation showing how the different gaming interfaces were used by the players to observe, collaborate, and interact within the game.

W. Broll, J. Ohlenburg, I. Lindt, I. Herbst, and A.-K. Braun.

Meeting Technology Challenges of Pervasive Augmented Reality Games.

In NetGames '06: Proceedings of the 5th ACM SIGCOMM Workshop on Network and System Support for Games, 2006. PDF

Abstract: Pervasive games are a new type of game combining new technologies with the players' real environment. While this already poses new challenges to the game developer, the requirements are even higher for pervasive Augmented Reality games, where the real environment is additionally enhanced by virtual game items. In this paper we review the technological challenges that must be met to realize pervasive AR games, show how they go beyond those of other pervasive games, and present how our AR framework copes with them. We further show how these approaches are applied to three pervasive AR games and draw conclusions about future requirements for supporting this type of game.

U. Pankoke-Babatz, I. Lindt, J. Ohlenburg, and S. Ghellal.

Crossmediales Spielen in Epidemic Menace [Crossmedia Gaming in Epidemic Menace].

In A. M. Heinecke and H. Paul (eds.), Mensch & Computer 2006: Mensch und Computer im Strukturwandel [Humans and Computers in Structural Change], 2006. PDF

J. Ohlenburg, I. Lindt, and U. Pankoke-Babatz.

A Report on the Crossmedia Game Epidemic Menace.

In 3rd International Workshop on Pervasive Gaming Applications (PerGames 2006), 2006. PDF

Abstract: Crossmedia games employ a wide variety of gaming interfaces based on stationary and mobile devices to facilitate different game experiences within a single game. This article presents the crossmedia game Epidemic Menace, introduces the game concept, and describes experiences from two Epidemic Menace game events. We also explain the technical realization of Epidemic Menace, the evaluation methodologies we used, and some evaluation results.

2005

W. Broll, I. Lindt, J. Ohlenburg, I. Herbst, M. Wittkämper, and T. Novotny.

An Infrastructure for Realizing Custom Tailored Augmented Reality User Interfaces.

IEEE Transactions on Visualization and Computer Graphics (TVCG), 11(6):722-733, 2005. PDF

Abstract: Augmented Reality (AR) technologies are rapidly expanding into new application areas. However, the development of AR user interfaces and appropriate interaction techniques remains a complex and time-consuming task. Starting from scratch is more common than building upon existing solutions. Furthermore, adaptation is difficult, often resulting in poor quality and limited flexibility with regard to user requirements. To overcome these problems, we introduce an infrastructure for supporting the development of specific AR interaction techniques and their adaptation to individual user needs. Our approach is threefold: a flexible AR framework providing independence from particular input devices and rendering platforms, an interaction prototyping mechanism allowing for fast prototyping of new interaction techniques, and a high-level user interface description extending user interface descriptions into the domain of AR. The general usability and applicability of the approach are demonstrated by means of three example AR projects.

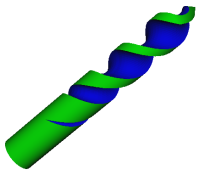

J. Ohlenburg and J. Müller.

Interactive CSG Trees Inside Complex Scenes.

In 16th IEEE Visualization 2005 (VIS 2005), 2005. PDF

I. Lindt, J. Ohlenburg, U. Pankoke-Babatz, S. Ghellal, L. Oppermann, and M. Adams.

Designing Cross Media Games.

In 2nd International Workshop on Pervasive Gaming Applications (PerGames 2005), 2005. PDF

Abstract: Cross media games focus on a wide variety of gaming devices, including traditional media channels, game consoles, and mobile and pervasive computing technology, to allow for a broad variety of game experiences. This paper introduces cross media games. It addresses the challenges of cross media games and points out game design, technical, commercial, and ethical aspects. A gaming scenario illustrates cross-media-specific game elements and game mechanics, and initial results gained with a paper-based version of the gaming scenario are presented. The paper concludes with an outlook.

J. Ohlenburg, T. Fröhlich, and W. Broll.

Internal and External Scene Graphs: A New Approach for Flexible Distributed Render Engines.

In VR '05: Proceedings of the IEEE Virtual Reality 2005 (VR'05), 2005. PDF

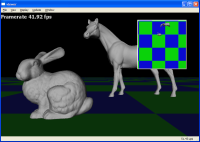

Abstract: Render engines or render APIs are a core part of every Virtual Reality (VR) or Augmented Reality (AR) environment, as well as of 3D games. Most existing approaches focus either on rendering speed for high frame rates or on the presentation of advanced visual features. However, no approach exists that integrates scene descriptions based on multiple file formats without converting, and thereby partly destroying, the native scene format.

In this paper we present our approach of internal and external scene graphs for realizing render engines. We show how this approach overcomes existing limitations while still providing decent frame rates and rendering features. While internal scene graphs are used for rendering, external scene graphs store format- or application-specific information, preserving its native structure and content.
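The split between an external, format-specific graph and an internal render graph can be sketched in a few lines of Python. This is only an illustration of the general idea under simplifying assumptions, not the render engine described in the paper: the node classes, the `build_internal` and `set_field` helpers, and the field names (`translation`, `mesh`, `x-authoring-tool`) are all hypothetical. The external graph keeps every native field untouched, the internal graph holds only what drawing needs, and a link table mirrors render-relevant edits from one to the other.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ExternalNode:
    """Format-specific node: keeps the native type name and all native fields."""
    kind: str
    fields: Dict[str, object] = field(default_factory=dict)
    children: List["ExternalNode"] = field(default_factory=list)


@dataclass
class RenderNode:
    """Internal node: only the data the renderer needs to draw."""
    translation: Vec3 = (0.0, 0.0, 0.0)
    mesh: Optional[str] = None
    children: List["RenderNode"] = field(default_factory=list)


def build_internal(ext: ExternalNode, links: Dict[int, RenderNode]) -> RenderNode:
    """Derive the internal graph from the external one, remembering node pairs.

    Keys are id() values, which stay valid only while the external nodes are
    alive; that is a simplification for this sketch.
    """
    node = RenderNode(
        translation=ext.fields.get("translation", (0.0, 0.0, 0.0)),
        mesh=ext.fields.get("mesh"),
    )
    links[id(ext)] = node
    node.children = [build_internal(child, links) for child in ext.children]
    return node


def set_field(ext: ExternalNode, links: Dict[int, RenderNode], name: str, value) -> None:
    """Edit the external node natively, then mirror render-relevant fields."""
    ext.fields[name] = value
    internal = links[id(ext)]
    if name == "translation":
        internal.translation = value
    elif name == "mesh":
        internal.mesh = value
    # Any other field (metadata, format extensions) stays in the external graph only.


# Usage: a native node carries extra data the renderer never sees.
scene = ExternalNode(
    "Transform",
    {"translation": (1.0, 0.0, 0.0), "x-authoring-tool": "some-editor"},
    [ExternalNode("Shape", {"mesh": "chair.obj"})],
)
links: Dict[int, RenderNode] = {}
root = build_internal(scene, links)
set_field(scene, links, "translation", (2.0, 0.0, 0.0))
```

Nothing is converted away: writing the external graph back out would reproduce the original structure, including fields the renderer ignores.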

W. Broll, I. Lindt, J. Ohlenburg, and A. Linder.

A Framework for Realizing Multi-Modal VR and AR User Interfaces.

In Universal Access in Human Computer Interaction (HCI 2005), 2005. PDF

Abstract: The development of Virtual Reality (VR) and Augmented Reality (AR) applications is still a cumbersome and error-prone task. While 2D desktop applications have very clear user interface standards and a very limited set of common input devices, no such standards yet exist for VR/AR applications. Additionally, a wide range of different 3D input devices exists.

In this paper we present our framework for realizing multi-modal VR and AR user interfaces. This framework does not rely on a single mechanism but provides a set of three individual, complementary mechanisms: an interface markup language for defining cross-platform user interfaces, object behaviors for rapid VR/AR interaction prototyping, and object-oriented scene graphs for realizing complex application scenarios.

2004

J. Ohlenburg, I. Herbst, I. Lindt, T. Fröhlich, and W. Broll.

The MORGAN Framework: Enabling Dynamic Multi-User AR and VR Projects.

In VRST '04: Proceedings of the ACM symposium on Virtual reality software and technology, pages 166-169, 2004. PDF

Abstract: The availability of a suitable framework is of vital importance for the development of Augmented Reality (AR) and Virtual Reality (VR) projects. While features such as scalability, platform independence, support of multiple users, distribution of components, and efficient and sophisticated rendering are the key requirements of current and future applications, existing frameworks often address these issues only partially. In our paper we present MORGAN, an extensible component-based AR/VR framework enabling sophisticated dynamic multi-user AR and VR projects. Core components include the MORGAN API, which gives developers access to various input devices, including common tracking devices, and a modular render engine concept that allows us to provide native support for individual scene graph concepts. The MORGAN framework has already been successfully deployed in several national and international research and development projects.

W. Broll, I. Lindt, J. Ohlenburg, M. Wittkämper, C. Yuan, T. Novotny, C. Mottram, A. Fatah, and A. Strothmann.

ARTHUR: A Collaborative Augmented Environment for Architectural Design and Urban Planning.

Journal of Virtual Reality and Broadcasting, 1(1), 2004. PDF

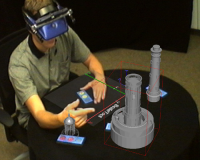

Abstract: Projects in the area of architectural design and urban planning typically engage several architects as well as experts from other professions. While the design and review meetings thus often involve a large number of cooperating participants, the actual design is still done by individuals in the time between those meetings, using desktop PCs and CAD applications. A truly collaborative approach to architectural design and urban planning is often limited to early paper-based sketches.

To overcome these limitations, we designed and realized the ARTHUR system, an Augmented Reality (AR) enhanced round table to support complex design and planning decisions for architects. While AR has been applied to this area before, our approach does not try to replace the use of CAD systems but rather integrates them seamlessly into the collaborative AR environment. The approach is enhanced by intuitive interaction mechanisms that can be easily configured for different application scenarios.

W. Broll, I. Lindt, J. Ohlenburg, M. Wittkämper, C. Yuan, T. Novotny, C. Mottram, A. Fatah, and A. Strothmann.

ARTHUR: A Collaborative Augmented Environment for Architectural Design and Urban Planning.

In Proceedings of HC 2004, 2004. PDF

Abstract: Projects in the area of architectural design and urban planning typically engage several architects as well as experts from other professions. While the design and review meetings thus often involve a large number of cooperating participants, the actual design is still done by individuals in the time between those meetings, using desktop PCs and CAD applications. A truly collaborative approach to architectural design and urban planning is often limited to early paper-based sketches.

To overcome these limitations, we designed and realized the Augmented Round Table, a new approach to support complex design and planning decisions for architects. While AR has been applied to this area before, our approach does not try to replace the use of CAD systems but rather integrates them seamlessly into the collaborative AR environment. The approach is enhanced by intuitive interaction mechanisms that can be easily configured for different application scenarios.

W. Broll, S. Grünvogel, I. Herbst, I. Lindt, M. Maercker, J. Ohlenburg, and M. Wittkämper.

Interactive Props and Choreography Planning with the Mixed Reality Stage.

In Proceedings of ICEC 2004, 2004. PDF

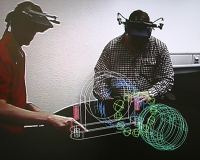

Abstract: This paper introduces the Mixed Reality Stage, an interactive Mixed Reality environment for collaborative planning of stage shows and events. The Mixed Reality Stage combines the presence of reality with the flexibility of virtuality to form an intuitive and efficient planning tool. The planning environment is based on a physical miniature stage enriched with computer-generated props and characters. Users may load virtual models from a Virtual Menu, arrange them using Tangible Units, or employ more sophisticated functionality in the form of special Tools. A major feature of the Mixed Reality Stage is the planning of choreographies for virtual characters. Animation paths may be recorded and walking styles may be defined in a straightforward way. The planning results are recorded and may be played back at any time. User tests have been conducted that demonstrate the viability of the Mixed Reality Stage.
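Recording an animation path and playing it back can be illustrated with timestamped samples and linear interpolation. The Python sketch below is only a generic example of that idea, assuming 2D positions on the stage floor; the `AnimationPath` class and its `record`/`sample` methods are hypothetical and do not describe how the Mixed Reality Stage actually stores or replays choreographies.

```python
from bisect import bisect_right
from typing import List, Tuple

Point = Tuple[float, float]


class AnimationPath:
    """Hypothetical recorder of timestamped positions on the stage floor."""

    def __init__(self) -> None:
        self.times: List[float] = []
        self.points: List[Point] = []

    def record(self, t: float, point: Point) -> None:
        """Append a sample; timestamps must be strictly increasing."""
        if self.times and t <= self.times[-1]:
            raise ValueError("timestamps must increase")
        self.times.append(t)
        self.points.append(point)

    def sample(self, t: float) -> Point:
        """Play back: linearly interpolate between the recorded samples around t."""
        if not self.times:
            raise ValueError("empty path")
        if t <= self.times[0]:
            return self.points[0]
        if t >= self.times[-1]:
            return self.points[-1]
        i = bisect_right(self.times, t)  # first recorded time strictly after t
        t0, t1 = self.times[i - 1], self.times[i]
        p0, p1 = self.points[i - 1], self.points[i]
        u = (t - t0) / (t1 - t0)
        return (p0[0] + u * (p1[0] - p0[0]), p0[1] + u * (p1[1] - p0[1]))


# Usage: a character walks diagonally across the stage in two seconds.
path = AnimationPath()
path.record(0.0, (0.0, 0.0))
path.record(2.0, (4.0, 2.0))
midway = path.sample(1.0)
```

Clamping to the first and last samples keeps playback well defined before and after the recorded interval; a real system would likely use smoother interpolation.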

J. Ohlenburg.

Improving Collision Detection in Distributed Virtual Environments by Adaptive Collision Prediction Tracking.

In VR '04: Proceedings of the IEEE Virtual Reality 2004 (VR'04), 2004. PDF

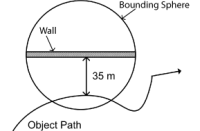

Abstract: Collision detection for dynamic objects in distributed virtual environments is still an open research topic. Network latency and limited network bandwidth prevent exact general solutions. The Consistency-Throughput Tradeoff states that a distributed virtual environment cannot be consistent and highly dynamic at the same time. Remote object visualization techniques extrapolate and predict the movement of remote objects, reducing the bandwidth required to approximate them well. However, the resulting small number of update messages aggravates the effect of network latency on collision detection.

In this paper we demonstrate new approaches that extend remote object visualization techniques to improve the results of collision detection in distributed virtual environments. We show how this can significantly reduce the approximation errors caused by remote object visualization techniques. This is done by predicting collisions between remote objects and adaptively changing the parameters of these techniques.
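A common form of remote object visualization is first-order dead reckoning: receivers extrapolate each remote object at constant velocity, and the owner sends a new update only when the true position drifts beyond an error threshold. The Python sketch below combines that with a simple collision prediction that tightens the threshold while two extrapolated objects are about to meet. It is an illustrative assumption, not the method from the paper: the names (`Update`, `needs_update`, `collision_predicted`, `adaptive_threshold`) and the two-level threshold values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]


@dataclass
class Update:
    """Last state received for a remote object: timestamp, position, velocity."""
    t: float
    pos: Vec2
    vel: Vec2


def extrapolate(u: Update, t: float) -> Vec2:
    """First-order dead reckoning: assume constant velocity since the last update."""
    dt = t - u.t
    return (u.pos[0] + u.vel[0] * dt, u.pos[1] + u.vel[1] * dt)


def needs_update(true_pos: Vec2, last_sent: Update, t: float, threshold: float) -> bool:
    """Sender side: transmit once the receivers' estimate drifts past the threshold."""
    return math.dist(true_pos, extrapolate(last_sent, t)) > threshold


def collision_predicted(a: Update, b: Update, t: float, radius_sum: float, horizon: float) -> bool:
    """Do the extrapolated objects come within radius_sum during [t, t + horizon]?"""
    pa, pb = extrapolate(a, t), extrapolate(b, t)
    dx, dy = pa[0] - pb[0], pa[1] - pb[1]
    dvx, dvy = a.vel[0] - b.vel[0], a.vel[1] - b.vel[1]
    dv2 = dvx * dvx + dvy * dvy
    # Time offset of closest approach for linear motion, clamped to the look-ahead window.
    tau = 0.0 if dv2 == 0.0 else -(dx * dvx + dy * dvy) / dv2
    tau = min(max(tau, 0.0), horizon)
    qa, qb = extrapolate(a, t + tau), extrapolate(b, t + tau)
    return math.dist(qa, qb) <= radius_sum


def adaptive_threshold(a: Update, b: Update, t: float, radius_sum: float,
                       horizon: float = 1.0, relaxed: float = 0.5, tight: float = 0.05) -> float:
    """Use a tight error bound only while a collision between a and b looks imminent."""
    return tight if collision_predicted(a, b, t, radius_sum, horizon) else relaxed


# Usage: two objects approach each other head-on and meet at t = 1.5.
a = Update(0.0, (0.0, 0.0), (1.0, 0.0))
b = Update(0.0, (3.0, 0.0), (-1.0, 0.0))
```

With a look-ahead of 2.0 the predicted meeting falls inside the window, so `adaptive_threshold(a, b, 0.0, 0.5, horizon=2.0)` selects the tight bound and updates are sent more often exactly when collision detection needs accuracy; with a look-ahead of 1.0 the relaxed bound still applies and bandwidth is saved.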

Last modified: Tuesday, 28 January 2014